There’s a good chance that if you have ever looked after a production SQL environment that you may have come across replication before, this technology is incredibly handy for getting data from A to B (or C, D etc), one of the best ways I’ve heard someone describe this tech is – “oh, so it’s copy and paste then?” and yes, in a way it is!

One of the big bug bares of this technology for me is its maintenance, there’s a job added after the initial setup which is due to run every 15 minutes and clears up any commands/transactions which match the criteria you’re looking for, in most cases these will be defaulted to 0 and 72 (@min_distretention and @max_distretention respectively). Recently we’ve had issue with this job which is that its run-time both far exceeds its schedule and that it causes insane amounts of blocking which consequentially takes all scheduler time meaning that no new rows can be taken from the logreader and added to the distributor DB – not ideal! If you do a quick scan around the internet for any information on how to reduce your distributor size or how to increase your throughput you’ll see a lot of mention for the option immediate_sync, massive warning here, if you have more than one publication on one database and you have a mix of this flag and different types on replication (snapshot and transaction in my case) this will break the clean-up job without telling you that it’s actually broken…! What will end up happening is that you’ll have loads of rows in your DB which aren’t being removed past their retention period.

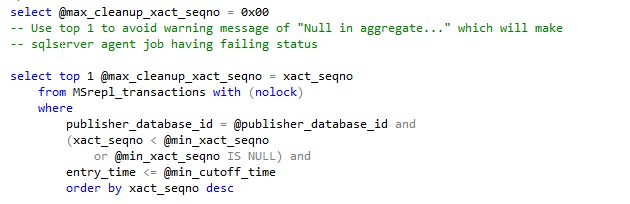

So, why does this cause an issue? Well to get to the bottom of that you’ll need to dig into Microsoft code. Thankfully, since the distribution DB is a system DB which acts more like a user DB you can actually read through all the code and create your own versions of the procs (if you really want). The issue comes from the following proc which is about 3 levels down into the clean-up sproc.

At this point during the clean-up process the code is in a cursor which is looking to obtain @max_cleanup_xact_seqno, this tells the clean-up job what the last seqno is that it can clean up till, this is based on the time parameters which you pass to the clean-up job, as mentioned earlier these are usually 0 and 72 which are translated as hours. What was happening in my environment was that 0x00 was being returned, which was causing the next proc in the chain (delete of commands & trans) to not process anything, as the code at the bottom of the proc states

But why would it return 0x00 (effectively NULL going off the comments)? Well, this is to do with the declared cursor at the top of this sproc, if you have any immediate_sync = false publications this will enter a loop for each agent which exists pulling out the minimum xact sequence number for each agent, this later on affects the final set of the @max_cleanup_xact_seqno = xact_seqno as the minimum for this publisher DB (ID 1 in my case) is not related at all to the immediate_sync = true publications, their minimum sequence numbers are way off vs the rest of my publications for the DB. In my opinion this is a massive bug, if you read the advice online and switch a few of your articles immediate_sync settings but not the rest (test the water or something) you may well end up with a very large DB, very quickly! In my case we were at the billion row mark before I found this! Be cautious!

It’s also worth noting there are some big problems in the way this procedure runs which can cause you some big issues when running, I’d personally recommend a few tweaks to help your system run;

Change (or create a new proc) for dbo.sp_MSsubscription_cleanup (I called all mine dbo.sp_[whatever]_MAXDOP) and set

* REPETABLE READ to NOLOCK on the first update statement

* Set the query to have OPTION(MAXDOP 1), this stops blocking and ensures you’re not killing your server for resource – this is maintenance after all.

Cheers,

Rik